The Dirty Little Guide to Optimizing Your Blog for Google Hummingbird

Google has replaced its age-old core search algorithm with a brand new one called “Google Hummingbird.”

But have you optimized your website or blog for this new algorithm? Have you changed your content marketing strategy so that your blog posts rank higher in Google SERPs?

Even if you think your answer is “Yes,” it is most probably “No.” The reason: you are probably not clear about the following:

1. What is Google Hummingbird?

2. Why did Google introduce it?

3. How do you optimize your blog or blog posts for the new Google Hummingbird algorithm?

Continue reading to learn about Google Hummingbird in depth, along with a simple yet effective SEO strategy to optimize your blog for this algorithm.

Understanding Google Hummingbird

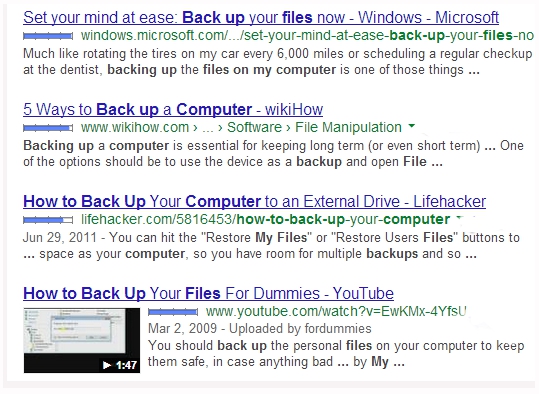

Google uses a set of search engine algorithms to index, analyze and rank web content semantically, so that it can provide the best possible results to searchers.

Using these computer-driven algorithms, Google indexes billions of web pages and stores them on large servers located in different parts of the world. It then analyzes the searcher’s query using semantic search technology. Finally, it ranks web pages using various ranking signals. Google uses more than 200 ranking signals to return the best possible results to searchers. So indexing, analyzing and ranking are the three basic jobs of the Google search engine.

Google Hummingbird is Google’s new core search algorithm. It is designed to match the way people search today and to make Google the best answering engine in the world.

Query Rewriting: Back in 2003, Google filed a patent for query rewriting, which was granted in 2011. Query rewriting is the technique Google uses to incorporate conversational search.

It means rephrasing the searcher’s query, using semantically available data, to understand its actual meaning or intent. That data may include the searcher’s place, the device used, search history, synonyms of the keywords used, and other information the searcher has shared with Google. Google Hummingbird is better positioned to handle query rewriting than its older counterpart.

Why is Google thinking about “Query Rewriting” now?
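To make the idea concrete, here is a minimal toy sketch of query rewriting in Python. It is only an illustration under my own assumptions: the synonym table, the filler-word list and the `rewrite_query` function are all hypothetical, and Google’s real system is certainly far more sophisticated. The sketch swaps in synonyms, uses the searcher’s place to resolve “near me,” and drops conversational filler words.

```python
# Toy illustration only: every name and table here is hypothetical,
# not Google's actual data or method.
SYNONYMS = {"purchase": "buy", "cellphone": "phone"}
FILLER = {"where", "can", "i", "a", "an", "the", "to", "want", "please"}


def rewrite_query(query, context):
    """Turn a long conversational query into a shorter, normalized one."""
    text = query.lower().strip(" ?.!")

    # Use semantic context (here, the searcher's place) to resolve "near me".
    place = context.get("place")
    if place:
        text = text.replace("near me", f"in {place}")

    # Map each word to a common synonym, then drop conversational filler.
    tokens = [SYNONYMS.get(word, word) for word in text.split()]
    return " ".join(word for word in tokens if word not in FILLER)


print(rewrite_query("Where can I purchase a cellphone near me?", {"place": "Boston"}))
# prints: buy phone in Boston
```

Notice that the long, question-style query collapses into a short one that carries the same intent, which is the whole point of query rewriting.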

The change needed to be done, because people have become so reliant on Google that they now routinely enter lengthy questions into the search box instead of just a few words related to specific topics.

Amit Singhal, the head of Google’s core ranking team.

The statement says a lot. It means searchers are using long-tail queries instead of a few head terms, and they usually enter their search keywords in the form of questions. In other words, conversational search is on the rise, and most conversational or voice searches come from mobile devices like smartphones and tablets. Google implemented query rewriting to better understand conversational search, and Google Hummingbird is the right set of algorithms to handle query rewriting.

Entity Search: To return the best possible results to searchers, Google has been steadily shifting its focus away from a few ranking signals like keywords, links and link anchor texts, and focusing more and more on entity search, or meaning search.

The reason: you can manipulate keywords, and you can manipulate links and link anchor texts, but you can’t manipulate the meaning or theme of a web page or article. So Google uses query rewriting to understand the actual intent behind the search query, and then tries to find and rank relevant web content that actually fulfills the searcher’s needs.

In the context of search, an entity is a person, place or thing. Google has stored millions of pieces of verified and validated structured data in its Knowledge Graph, and these act as entities. Semantic search also uses data about the searcher, such as the searcher’s place, the device used for the search, search history and behavior, and other information the searcher has shared with Google.

By leveraging its vast Knowledge Graph along with semantically available data about the searcher, Google performs entity search, or meaning search, with the help of query rewriting. Entity search doesn’t mean Google only looks for a specific entity in its Knowledge Graph or on the web. It actually tries to establish a relation between the entities in the search query to find the searcher’s intent or actual needs.

For example: Suppose you do a Google search for “I want to buy an iPhone.”

“I” is an entity. “An iPhone” is an entity. And “want to buy” combines or links the other two entities to establish a meaning or intent.
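The same example can be sketched in a few lines of Python. This is a toy illustration under my own assumptions, not how Google actually does it: the `KNOWN_ENTITIES` table stands in for a knowledge graph, and `RELATION_PHRASES`, `find_entity` and `parse_intent` are hypothetical names made up for this sketch.

```python
# Toy illustration only: a tiny stand-in for a knowledge graph.
# All names and entries are hypothetical.
KNOWN_ENTITIES = {
    "i": "person (the searcher)",
    "iphone": "thing (a product)",
}
RELATION_PHRASES = ["want to buy", "want to sell"]


def find_entity(fragment):
    """Return the first known entity found in a text fragment, or None."""
    for word in fragment.split():
        if word in KNOWN_ENTITIES:
            return word
    return None


def parse_intent(query):
    """Split a query into (entity, relation, entity), or None if no relation matches."""
    text = query.lower().strip(" ?.!")
    for phrase in RELATION_PHRASES:
        if phrase in text:
            left, right = text.split(phrase, 1)
            return find_entity(left), phrase, find_entity(right)
    return None


print(parse_intent("I want to buy an iPhone."))
# prints: ('i', 'want to buy', 'iphone')
```

The result is a simple triple: two entities and the relation that links them, which together describe the intent behind the query.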

Data suggests that the number of searches from mobile devices will surpass searches performed from desktops by the end of 2014. Voice search, or conversational search, is gaining pace rapidly, and Google Hummingbird is built to understand the meaning or intent behind such searches.

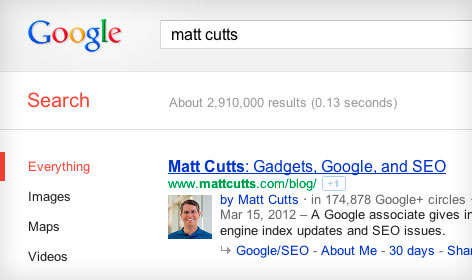

Authorship Rank: There has been a lot of chatter about Google Authorship Rank over the last year or so. When you add authorship markup to a piece of content on the web, Google attributes that content to you as its creator or author.

Authorship Rank acts like a double-edged sword. When you create really great content that people like to bookmark, share with their friends (via social media) and revisit again and again, you become an authoritative publisher in the eyes of Google. That means your Authorship Rank goes up and Google ranks your content higher in search results. The opposite happens when you create low-quality web content or articles that don’t fulfill searchers’ needs.

Always keep in mind: once you’ve implemented authorship markup, you’re sending all possible data to Google as the author or creator of your content. Web content with authorship markup not only gets a higher CTR but also ranks higher in search results. Together, Google+ and Google Hummingbird help Google use Authorship Rank as a ranking signal.

Matt Cutts, the head of Google’s web-spam team, hopes Google will explore Authorship Rank in the near future. Check out the video here.

Social Signal: Before the release of Google Hummingbird, Google said time and again that it was not using social signals like Facebook likes, tweets and Google +1s in its ranking algorithms. Matt Cutts, the head of Google’s web-spam team, has personally clarified this at various meetings and conferences.

One argument is “Correlation doesn’t mean causation.” If a web page is ranking high and has hundreds of Facebook shares, retweets and Google +1s, this doesn’t always mean the page is ranking because of the high number of social shares (or social shares by influencers). It might be that many people found the page helpful and shared it on social media. The page may also have other strong ranking signals that made it rank higher.

For example: Suppose there was heavy rain this year and most people were wearing yellow raincoats. The two are correlated, but you can’t say there was heavy rain because most people were wearing yellow raincoats.

Why is Google not using social signals for content ranking? Not because Google doesn’t like social signals, but because it had technical limitations in using them as a ranking factor.

With Google Hummingbird, Google can process social signals more efficiently. In the near future you will start seeing Google Plus impacting search results, and likes, tweets and stumbles as well.

- Google Hummingbird is a platform for Google to incorporate existing algorithms like Panda, Penguin etc. and also the advanced algos to be developed in the future.

Google Hummingbird Takeaways (Act Now)

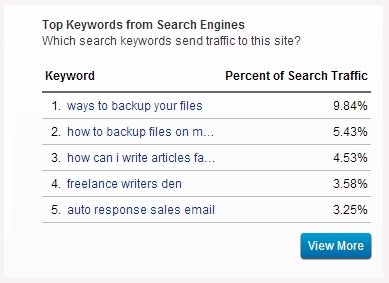

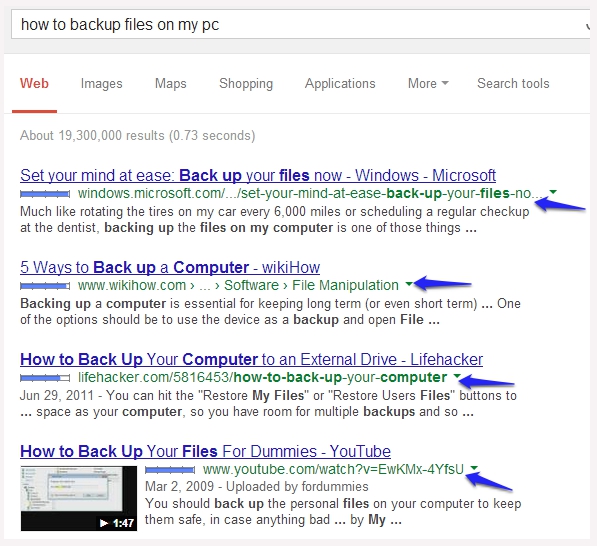

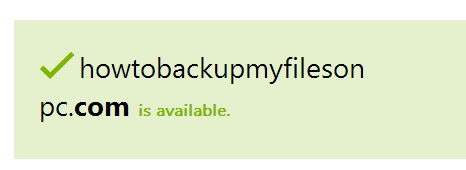

1. Keywords: Craft your content or web pages around a theme or concept instead of keywords. By encrypting all searches (https://), and so making “keyword not provided” 100%, Google has made its intention clear. Google has gone beyond keywords.

That doesn’t mean you shouldn’t include keywords that your target audience is actively searching for. But your content should answer specific queries from your visitors or searchers.

2. Long-tail Keywords: Did Google Hummingbird kill long-tail keywords?

No. Google Hummingbird acts at the query level: it shortens the long queries that searchers type by rewriting them. It doesn’t affect how the long-tail keywords in your content are ranked. You should still write quality, meaningful content with long-tail keywords in mind.

3. Links: Did Google Hummingbird kill links and link anchor-texts?

No. Although the value of links and anchor texts as a ranking signal has reduced a bit, they will be here for a long time. PageRank (PR) still flows via links, but Google doesn’t want you to depend solely on links for better ranking of your content.

4. Mobile Optimization: Your website or blog theme should be optimized for all types of mobile devices like smartphones and tablets. Basically, it should be responsive and fast loading.

If your website doesn’t offer a good mobile user experience, your rankings may drop in desktop search as well as in mobile search.

5. Panda & Penguin: Where are Google Panda and Penguin?

Algorithms like Panda, Penguin, Top-heavy and EMD, which are filters or algorithmic penalties, are parts of Google Hummingbird. Algorithms developed in the future to catch spammers or to rank web content higher will also be parts of Google Hummingbird.

6. Author Rank: As of now, Google has not announced anything about it officially. But be prepared for it. By introducing Google+, Google Hummingbird and authorship markup, Google has made its intention clear.

Everything you create on the web, whether on your own blog or on others’ blogs, will be attributed by Google towards your Authorship Rank (AR). Spammy authors will be punished in the same way, by having their AR reduced.

7. Data Validation: Google treats its Knowledge Graph and (to some extent) Wikipedia as sources of correct facts and figures. If your article doesn’t agree with their data, it will be ranked lower. On the other hand, if you contribute to Google’s Knowledge Graph by providing high-quality, authentic data on your web page, Google will rank your content higher.

8. Social Signal: Google Plus will play a major role in the coming days by providing social signals to Google for ranking content. Your brand or website should have its own Google+ page. And if you perform well, your brand may become a part of the Knowledge Graph in the future.

The social signals, in decreasing order of authority, will be Google+, Twitter, Facebook and so on.

9. Advanced Link Analysis: Google Hummingbird is going to fulfill Google’s long-cherished wish of analyzing different kinds of links and assigning them different values. Google is already doing this, but it will become more sophisticated in the coming days.

The value of a link on the sidebar, on the footer, within the article and inside the author-box will be calculated with more accuracy.

10. User Experience: Time spent on the page, CTR and user interaction with the web page will matter more for ranking content under Google Hummingbird. Along with quality content, focus on aspects of your website or blog theme such as navigation, readability and contrast for a better user experience.

Dear friends. Greetings from Google Hummingbird! ;)

These greetings are for you because you love to create content and always use white hat SEO techniques. With the introduction of Google Hummingbird, there will be no place for spammers in the future. Bloggers and content marketers with good intentions will be highly rewarded for their hard work.

This is Akash KB, signing out from AllBloggingTips.com. Till the next post, happy blogging :)

Don’t forget to post your comments and questions. I’ll be right here to answer them ;)